XAI (Explainable Artificial Intelligence)

Introduction

Early AI systems were easy to understand, but in recent years more complex decision systems, such as deep learning, have emerged. These systems succeed because they combine powerful algorithms with large amounts of data.

Such models, however, have a very large parameter space, which makes their inner workings difficult to understand. For this reason they are often called "black-box" models. Deep learning and machine learning methods are now widely used in almost every industry.

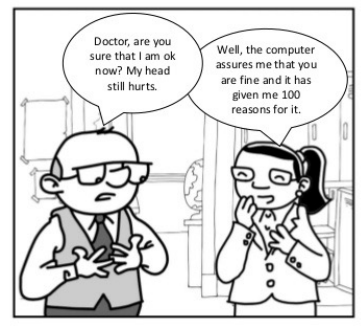

Because artificial intelligence techniques are used in fields that directly affect people's lives and livelihoods (such as healthcare, law, and finance), people frequently question whether a model's predictions can be relied upon.

Explainable artificial intelligence (XAI) refers to the ability of an AI system to explain its outputs or decisions in a way that humans can understand. As AI systems take on a wide range of tasks, including autonomous driving, medical diagnosis, credit scoring, and legal decision-making, XAI is becoming increasingly important.

Modern AI systems, and deep learning models in particular, can be extremely complex and difficult to interpret. This can lead to a lack of transparency and trust in these systems, which may limit their adoption and impact.

By offering insight into how AI systems make decisions, XAI attempts to alleviate this problem. The result can be greater transparency, less bias, and more accurate and dependable AI systems. When people know how an AI system arrives at a particular decision, they can assess its outputs more accurately, make informed decisions, and take appropriate action.

There are many methods and strategies for XAI, ranging from model-agnostic techniques that can be used with any AI system to specialized techniques designed for particular kinds of models or applications. XAI is an active area of research and development, and its significance is likely to grow as AI systems are used more frequently in our daily lives.

Understanding the Model Prediction

Understanding model predictions means being able to analyze a machine learning model's outputs or decisions in order to learn how it arrives at them. This is crucial because it can guide decisions based on the model's outputs and help identify biases, flaws, or limitations in the model.

Sometimes, understanding model predictions is fairly simple. For instance, with a linear regression model it is easy to identify which features matter most for a prediction, because the output is just a weighted sum of the input features.
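As a minimal sketch of the linear case, the snippet below splits a single prediction into per-feature contributions. The feature names, weights, and intercept are hypothetical values chosen only for illustration; they do not come from any real model.

```python
# Hypothetical weights and feature names, chosen only for illustration.
feature_names = ["size_m2", "rooms", "age_years"]
weights = [3.0, 10.0, -0.5]
bias = 20.0  # intercept term


def predict(x):
    """Linear model output: intercept plus the weighted sum of the inputs."""
    return bias + sum(w * xi for w, xi in zip(weights, x))


def contributions(x):
    """Per-feature share of the prediction (weight times feature value)."""
    return {name: w * xi for name, w, xi in zip(feature_names, weights, x)}


x = [50.0, 3.0, 10.0]
prediction = predict(x)
parts = contributions(x)

print("prediction:", prediction)
# List the features from largest to smallest absolute contribution.
for name, value in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.1f}")
```

The contributions plus the intercept add up exactly to the prediction, which is what makes a linear model easy to read. Comparing the raw weights directly is only meaningful when the features are on a comparable scale.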

Understanding model predictions is harder for more complex models, such as deep learning models. Because these models can have millions of parameters and intricate, non-linear relationships between input features and outputs, it can be challenging to understand how they make their predictions.

A variety of techniques can help in understanding model predictions, from straightforward visualization to more sophisticated approaches such as attribution methods, saliency maps, and feature importance analyses. These methods can help determine which parts of an input matter most for a prediction, which parts of the model are most active for a given input, and which features most strongly influence the model's outputs.
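One common form of feature importance analysis is permutation importance: shuffle one feature column at a time and measure how much the model's error grows. The sketch below is only an illustration under stated assumptions. The `model` function is a hypothetical stand-in for a black-box model, and the data is synthetic.

```python
import random


def model(row):
    """Hypothetical black box: depends strongly on x0, weakly on x1, not on x2."""
    return 5.0 * row[0] + 0.5 * row[1]


def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, n_features, seed=0):
    """Increase in error after shuffling each feature column in turn."""
    rng = random.Random(seed)
    baseline = mean_squared_error(rows, targets)
    scores = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        scores.append(mean_squared_error(shuffled, targets) - baseline)
    return scores


# Synthetic data: 200 rows with 3 features drawn uniformly from [0, 1).
data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
targets = [model(r) for r in rows]  # noise-free targets, so baseline error is 0

scores = permutation_importance(rows, targets, n_features=3)
for j, s in enumerate(scores):
    print(f"feature x{j}: importance {s:.4f}")
```

Because the procedure only calls the model and never inspects its internals, the same loop works for any model that maps a row to a prediction. A feature the model ignores, such as x2 here, receives an importance of zero.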

Overall, understanding model predictions is a key part of machine learning and is essential for ensuring that models are accurate, dependable, and transparent. If we understand how models generate their predictions, we can assess their results more accurately and make defensible decisions based on them.

XAI Concepts

- Understandability: The property of a model that lets a user comprehend how it operates without having to delve into its internal structure and functions.

- Comprehension: The recipient's capacity to comprehend the model's knowledge.

- Interpretability: The capacity of a model to present its algorithms and outcomes in a human-readable form.

- Explainability: The model's ability to describe or clarify its mechanisms through appropriate actions.

- Transparency: A model is said to be transparent if its workings are completely clear.

XAI Goals

In the field of explainable artificial intelligence (XAI), it is important to understand a few essential ideas. These include:

- Interpretability: The degree to which people can understand how a machine learning model functions and arrives at its predictions or decisions. This is crucial for ensuring that models are clear, comprehensible, and reliable.

- Transparency: The degree of openness and visibility of a machine learning model's internal operations. Transparent models are easier to inspect and understand, and they can foster confidence in the model's predictions.

- Explainability: The capacity to offer brief, understandable justifications for a model's conclusions or predictions. This is crucial for enabling people to understand and trust the model's outputs.

- Model-agnostic methods: Techniques that can be applied regardless of a machine learning model's architecture or underlying algorithm. They can be used to explain individual predictions or to learn more about the model's overall behavior.

- Attribution methods: Procedures for identifying the input features that matter most for a model's predictions. They can help uncover model biases and check that the model bases its decisions on relevant factors.

- Saliency maps: Visual depictions that highlight the regions of an image that matter most for a model's predictions. They can be used to spot potential biases or errors and to gain insight into how the model handles the input data.

- Fairness: The absence of bias or prejudice in a machine learning model. XAI techniques can be used to find and reduce model biases, helping to make models impartial and fair.
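The model-agnostic, attribution, and saliency-map ideas in the list above can be combined in one small sketch: an occlusion-based saliency map, which blanks out one pixel at a time and records how much the model's score drops. This is only one of several ways to build a saliency map. The `model_score` function and the tiny "image" below are hypothetical stand-ins chosen for illustration.

```python
def model_score(image):
    """Hypothetical black box: responds only to the 2x2 patch in the top-left corner."""
    return sum(image[r][c] for r in range(2) for c in range(2))


def occlusion_saliency(image, score_fn, fill=0.0):
    """Score drop when each pixel is replaced by `fill`, one pixel at a time."""
    baseline = score_fn(image)
    saliency = []
    for r, row in enumerate(image):
        saliency_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy, leave input intact
            occluded[r][c] = fill
            saliency_row.append(baseline - score_fn(occluded))
        saliency.append(saliency_row)
    return saliency


# A tiny 3x4 grayscale "image" with made-up pixel values.
image = [
    [1.0, 2.0, 5.0, 5.0],
    [3.0, 4.0, 5.0, 5.0],
    [5.0, 5.0, 5.0, 5.0],
]

saliency = occlusion_saliency(image, model_score)
for row in saliency:
    print(" ".join(f"{v:4.1f}" for v in row))
```

Only the pixels in the top-left patch receive non-zero saliency, even though other pixels have larger values, because the stand-in model never looks at them. The function treats the model purely as a scoring function, so it applies to any model that maps an image to a number.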

All things considered, these ideas are essential for understanding why XAI matters and for developing methods that make machine learning models more transparent, explainable, and reliable.

Target Audience of XAI

The target audience of XAI comprises the various stakeholders involved in the creation, deployment, and use of AI systems. These include:

- Data scientists and machine learning engineers: They are responsible for developing and training AI models, so they must understand how the models operate and how to improve their performance. They can use XAI techniques to understand the model's behavior and spot problems that need to be fixed.

- Business decision-makers and leaders: To act sensibly on the information presented, they must understand the outputs and forecasts of AI models. They can use XAI techniques to verify that decisions are based on accurate and trustworthy information and to better understand how the model generates its predictions.

- Regulators and policymakers: They are in charge of ensuring that AI systems are used ethically and responsibly. They can use XAI techniques to find biases or flaws in AI systems and to check that the systems comply with applicable rules and standards.

- End-users and consumers: To make decisions based on the information presented, they must understand the outputs and forecasts of AI systems. XAI techniques can help them understand how the model generates its predictions and develop trust in the system.

Overall, XAI matters to a variety of stakeholders involved in the creation, deployment, and use of AI systems. By explaining how AI systems function and how they make their predictions, XAI can help improve transparency, reduce bias, and increase the trustworthiness and dependability of those systems.

Conclusion

Explainable Artificial Intelligence (XAI) is a significant field that focuses on developing approaches for increasing the transparency, interpretability, and reliability of machine learning models. XAI techniques are intended to help stakeholders understand how AI systems operate and make their predictions, and to check that those systems are impartial and fair.

XAI is crucial because it can build confidence in AI systems and help ensure that they are used ethically and responsibly. By giving succinct, explicit explanations of how AI models operate and make their predictions, XAI can help improve transparency, reduce bias, and increase the trustworthiness and dependability of AI systems.

The significance of XAI will only grow as AI systems become more complex and more deeply integrated into our lives. By improving XAI techniques and making them more accessible to a variety of stakeholders, we can help ensure that AI systems are used responsibly and ethically and that they ultimately benefit society as a whole.

References