Deep Learning

In simple terms, deep learning is an artificial intelligence (AI) technique that trains computers to process data in a way loosely inspired by the human brain, using neural networks with many layers. Deep learning models can recognize complex patterns in images, text, sound, and other data, and use them to make predictions.

Workflow of Deep Learning Implementation

(Figure: Workflow of Deep Learning)

A deep learning implementation workflow can be broadly separated into the following steps:

- Data Preparation: Gathering and preparing data for deep learning model training and testing. This may entail cleaning and pre-processing the data, dividing it into training and testing sets, and converting it to a model-compatible format.

- Model Selection: Choosing a deep learning model architecture suited to the problem at hand. This can mean picking one of many pre-built models or designing a custom architecture.

- Model Training: Training the chosen model on the prepared training data. This stage involves setting the model's hyperparameters, such as the learning rate and batch size, and then running the training process to update the model's weights.

- Model Evaluation: Measuring the trained model's performance on held-out test data, using relevant metrics such as accuracy or F1 score, to judge how well it generalizes to new data.

- Model Optimization: Tuning the model's hyperparameters to improve its performance, judged on validation data so that the test set remains an unbiased final check. Techniques such as regularization, dropout, and learning-rate annealing may be used.

- Deployment: Putting the trained model into production so that it can make predictions on new data. This could involve integrating the model into a larger software system or building a stand-alone application.
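Learning-rate annealing, mentioned under Model Optimization, means lowering the learning rate as training progresses. As a minimal sketch, assuming a cosine schedule (one common choice among several) and a hypothetical helper named cosine_annealing, the learning rate for a given training step could be computed like this in plain Python:

```python
import math

def cosine_annealing(step, total_steps, initial_lr=1e-3, min_lr=0.0):
    """Hypothetical helper: cosine-annealed learning rate for a given training step."""
    step = min(step, total_steps)  # stay at min_lr once the schedule has ended
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / total_steps))  # goes from 1 down to 0
    return min_lr + (initial_lr - min_lr) * cosine

# Learning rate at the start, middle, and end of a 1,000-step schedule
for s in (0, 500, 1000):
    print(s, round(cosine_annealing(s, 1000), 6))
```

In Keras, built-in schedules such as tf.keras.optimizers.schedules.CosineDecay or ExponentialDecay can be passed as an optimizer's learning_rate instead of a fixed value.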

Implementation

Platform used: Google Colab

Dataset: Kuzushiji-MNIST

Activation Function: ReLU

Source Code:

# Load the necessary packages
import numpy as np  # math operations on arrays
import matplotlib.pyplot as plt  # visualizations
import tensorflow as tf  # model building and training
import tensorflow_datasets as tfds  # data handling
from tensorflow.keras.layers import Dense, Dropout  # model components
from tensorflow.keras.models import Sequential  # model construction

Description:

This code imports the Python packages used in the implementation:

- NumPy (imported as np) is a popular Python library for numerical computing. It provides efficient operations on arrays and matrices.

- matplotlib.pyplot (imported as plt) is a Python plotting library, often used to visualize data such as images, graphs, and charts.

- TensorFlow (imported as tf) is an open-source machine learning framework developed by Google. It provides tools for building, training, and deploying deep learning models.

- TensorFlow Datasets (imported as tfds) is a library of ready-to-use datasets, such as KMNIST, for machine learning projects.

- Dense and Dropout are Keras layers used to build neural networks. Dense creates a fully connected layer, in which every input is connected to every neuron. Dropout is a regularization layer that reduces overfitting by randomly setting a fraction of its inputs to zero during training.

- Sequential is a Keras model class that builds a model as a linear stack of layers, where the output of each layer is the input of the next. This makes it simple to build feed-forward neural networks.
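To make the Dense and Dropout descriptions concrete, here is a plain-Python sketch of what these layers compute for a single input vector. The helper names dense, relu, and dropout are illustrative, not Keras APIs. The dropout helper follows the "inverted dropout" convention that Keras documents for its Dropout layer: during training, surviving values are scaled by 1 / (1 - rate), and at inference time inputs pass through unchanged.

```python
import random

def dense(inputs, weights, biases):
    """Fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [sum(x * row[j] for x, row in zip(inputs, weights)) + b
            for j, b in enumerate(biases)]

def relu(values):
    """ReLU activation: negative values become 0, positive values pass through."""
    return [max(0.0, v) for v in values]

def dropout(values, rate, training, rng=random):
    """Inverted dropout: in training, zero each value with probability `rate` and
    scale the survivors by 1 / (1 - rate); at inference, return the inputs unchanged."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

x = [1.0, 2.0, 0.5]                            # 3 input features
W = [[0.2, -0.4], [0.4, -0.3], [-0.5, 0.8]]    # one row per input, one column per unit
b = [0.1, -0.2]                                # one bias per unit
h = relu(dense(x, W, b))                       # second unit is negative, so ReLU zeroes it
print(h)
print(dropout(h, rate=0.5, training=True, rng=random.Random(42)))
```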

Source Code:

# Load the kmnist dataset
(ds, ds_test), info = tfds.load(
    'kmnist',                 # name of the dataset
    split=['train', 'test'],  # load the training and test splits
    as_supervised=True,       # return (image, label) tuples
    shuffle_files=True,       # shuffle the order of the input files
    with_info=True            # also return information about the dataset
)

Description:

This code uses the TensorFlow Datasets function tfds.load() to load the Kuzushiji-MNIST (KMNIST) dataset. It returns two dataset objects (ds for training and ds_test for testing) as well as the dataset metadata (info).

The tfds.load() function takes several options that configure how the dataset is loaded:

- 'kmnist': the name of the dataset to load, in this case Kuzushiji-MNIST.

- split=['train', 'test']: loads the dataset's predefined training split (60,000 images) and test split (10,000 images) as two separate datasets, one for training the model and the other for testing it.

- as_supervised=True: returns each example as an (image, label) tuple instead of a dictionary of features.

- shuffle_files=True: shuffles the order of the input files the data is read from. It does not shuffle individual examples, which is why the pipeline shuffles the training data again later.

- with_info=True: also returns a metadata object (info) with information such as the number of classes and the number of training and testing examples.

Source Code:

# Run the code to see the labels for the images
print(f'Number of categories: {info.features["label"].num_classes}')
print(f'Names of the categories: {info.features["label"].names}')

Description:

- This code prints the number of categories in the Kuzushiji-MNIST (KMNIST) dataset as well as their names (which correspond to the labels of the images).

- info.features is a dictionary-like object that describes the dataset's features, such as their names and types. Here, info.features["label"] refers to the dataset's label feature, which holds each image's category (label).

- The first line prints the number of categories (the number of unique labels) in the dataset, using the label feature's num_classes property.

- The second line prints the category names using the label feature's names property (the name corresponding to each label). This helps in interpreting what each label means in the context of the dataset.

Source Code:

# get the labels of all examples
labels = ds.map(lambda x, y: y).batch(info.splits['train'].num_examples)
print(labels.element_spec)

Description:

- This code extracts the labels of all samples in the Kuzushiji-MNIST (KMNIST) training dataset.

- ds is the TensorFlow training dataset loaded earlier; each of its elements is an (image, label) tuple. The map() function applies a lambda function that takes x (the image) and y (the label) and returns only y, producing a dataset of labels.

- The batch() function then groups the labels into batches of info.splits['train'].num_examples elements. Because this batch size equals the number of training examples, the result is a single batch that holds all 60,000 labels; print(labels.element_spec) shows the type and shape of that batch.

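One common reason to collect all labels is to check how many examples each class has. The sketch below runs in plain Python on a small made-up list of labels; class_distribution is a hypothetical helper. With the real dataset, the single batch produced above could be pulled out with next(iter(labels)).numpy() and counted the same way, or with np.bincount(..., minlength=10).

```python
from collections import Counter

def class_distribution(labels, num_classes):
    """Hypothetical helper: number of examples per class, including empty classes."""
    counts = Counter(labels)
    return [counts.get(c, 0) for c in range(num_classes)]

# Made-up labels standing in for the array extracted from the dataset
toy_labels = [0, 1, 1, 2, 0, 1, 9]
print(class_distribution(toy_labels, num_classes=10))  # → [2, 3, 1, 0, 0, 0, 0, 0, 0, 1]
```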

Source Code:

# Investigate the 'info' variable
print(info)

# Show example images from the dataset
fig = tfds.show_examples(ds, info)

# Show the first 10 test examples as a table
tfds.as_dataframe(ds_test.take(10), info)

dataset_sample = ds.take(3)  # take a sample of 3 examples from the dataset

i = 0
for image, label in tfds.as_numpy(dataset_sample):
    i += 1
    print(f'Image number {i}:')
    print(f'Shape of image: {image.shape}')
    print(f'Image min pixel value: {image.min()}')  # smallest pixel value in the image
    print(f'Image max pixel value: {image.max()}')  # largest pixel value in the image
    print(f'Number of different pixel values: {len(np.unique(image))}')
    print(f'Image values type: {image.dtype}')
    print(f'Label for the image: {label}')
    print('#'*30)

Description:

- print(info) displays the dataset metadata, and tfds.show_examples() displays sample images from ds together with their labels, using the dataset information in info.

- tfds.as_dataframe() converts the first 10 examples of ds_test into a pandas DataFrame for display.

- The for loop then iterates over a sample of 3 examples from ds and prints, for each image: its number, its shape, its smallest and largest pixel values, the number of distinct pixel values, the data type of the pixel values, and its label.

- To separate the output for each image visually, the code prints a line of 30 hash symbols (#) after each image.

# Create a validation set: take the first 10,000 samples for validation
# and remove them from the training set
ds_val = ds.take(10000)
ds_train = ds.skip(10000)

# Define the function for image transformation
def normalize_reshape_image(image, label):
    image = tf.cast(image, tf.float32) / 255.  # scale uint8 pixels (0-255) to the range [0, 1]
    image = tf.reshape(image, [image.shape[0]*image.shape[1]])  # flatten the 28x28x1 image into a 784-element vector
    return image, label

Description:

- The first 10,000 samples of the original training dataset ds form the validation set ds_val, and the remaining 50,000 samples form the training set ds_train.

- The code also defines the function normalize_reshape_image, which takes an image and a label and returns the normalized, flattened image together with the unchanged label.

- The function first converts the image to the float32 data type and then divides the pixel values by 255, scaling them from the range 0-255 to the range 0-1.

- It then uses tf.reshape to flatten the image into a 1D vector of length image.shape[0] * image.shape[1], that is 28 × 28 = 784.

- Finally, the function returns the flattened, normalized image and its matching label.
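The validation split and the image transformation can be illustrated without TensorFlow. In this sketch, take and skip are hypothetical list-based stand-ins for Dataset.take() and Dataset.skip(), and normalize_reshape mimics normalize_reshape_image on a tiny one-channel image stored as nested lists. Note that the two tf.data calls split the data cleanly only if ds yields its examples in the same order each time it is iterated.

```python
from itertools import islice

def take(iterable, n):
    """List-based stand-in for Dataset.take(n): the first n elements."""
    return list(islice(iterable, n))

def skip(iterable, n):
    """List-based stand-in for Dataset.skip(n): everything after the first n elements."""
    return list(islice(iterable, n, None))

def normalize_reshape(image):
    """Mimics normalize_reshape_image: uint8 pixels become floats in [0, 1], and a
    (rows, cols, 1) nested list is flattened into a vector of length rows * cols."""
    return [pixel / 255.0 for row in image for (pixel,) in row]

examples = list(range(12))               # stand-ins for 12 dataset examples
val, train = take(examples, 4), skip(examples, 4)

image = [[[0], [255]], [[51], [102]]]    # a 2x2x1 "image"
print(val, train, normalize_reshape(image))
```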

# Training set
ds_train = ds_train.map(normalize_reshape_image, num_parallel_calls=tf.data.experimental.AUTOTUNE)  # map the normalize_reshape_image function to each image
ds_train = ds_train.cache()
ds_train = ds_train.shuffle(info.splits['train'].num_examples - 10000)  # buffer as large as the training set (50,000 images)
ds_train = ds_train.batch(128)
ds_train = ds_train.prefetch(tf.data.experimental.AUTOTUNE)

# Validation set - make the same transformations for the validation set (no shuffling needed)
ds_val = ds_val.map(normalize_reshape_image, num_parallel_calls=tf.data.experimental.AUTOTUNE)  # map the normalize_reshape_image function to each image
ds_val = ds_val.cache()
ds_val = ds_val.batch(128)
ds_val = ds_val.prefetch(tf.data.experimental.AUTOTUNE)

# Transform the test set
# Note that for the test set there is no 'shuffle' method and 'cache' is after 'batch'
ds_test = ds_test.map(normalize_reshape_image, num_parallel_calls=tf.data.experimental.AUTOTUNE)  # map the normalize_reshape_image function to each image
ds_test = ds_test.batch(128)
ds_test = ds_test.cache()
ds_test = ds_test.prefetch(tf.data.experimental.AUTOTUNE)

Description:

The code applies the following transformations to the training, validation, and test sets. Each pipeline starts from its own dataset (ds_train, ds_val, or ds_test). Starting all three from ds would make every pipeline read the full training split, so the validation and test results would be measured on training images.

Training set (ds_train):

- map() applies the normalize_reshape_image function to each image; num_parallel_calls=tf.data.experimental.AUTOTUNE lets TensorFlow choose how many images to process in parallel.

- cache() keeps the transformed images in memory, so they do not have to be reloaded and re-transformed in later epochs.

- shuffle() randomly shuffles the examples with a buffer as large as the training set (60,000 - 10,000 = 50,000 images), which gives a full shuffle in every epoch.

- batch(128) groups the examples into batches of 128.

- prefetch(tf.data.experimental.AUTOTUNE) prepares the next batches while the model is training on the current one, which improves performance.

Validation set (ds_val):

- The same map(), cache(), batch(), and prefetch() steps are applied. Shuffling is left out because the order of the validation examples does not change the validation metrics.

Test set (ds_test):

- map() and batch(128) are applied first, and the batched dataset is then cached and prefetched. As with the validation set there is no shuffle() step, and here cache() comes after batch().
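The training set is shuffled with a buffer as large as the training set itself. To see why the buffer size matters, here is a sketch of buffer-based shuffling in the style described for tf.data.Dataset.shuffle: a buffer holds buffer_size elements, each output is drawn at random from that buffer, and the freed slot is refilled from the input. buffered_shuffle is a hypothetical helper, and TensorFlow's internal implementation may differ in detail.

```python
import random

def buffered_shuffle(iterable, buffer_size, seed=None):
    """Hypothetical helper: keep up to `buffer_size` elements buffered; each time a new
    element arrives, emit a randomly chosen buffered element to make room for it."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    rng = random.Random(seed)
    buffer = []
    for item in iterable:
        if len(buffer) == buffer_size:
            idx = rng.randrange(buffer_size)
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]  # move the chosen element to the end
            yield buffer.pop()                                  # emit it (O(1) removal)
        buffer.append(item)
    rng.shuffle(buffer)  # input exhausted: emit the remaining elements in random order
    yield from buffer

sorted_examples = list(range(20))  # e.g. examples stored in label order
print(list(buffered_shuffle(sorted_examples, buffer_size=3, seed=0)))   # stays roughly sorted
print(list(buffered_shuffle(sorted_examples, buffer_size=20, seed=0)))  # full random permutation
```

With a small buffer, an element can only be emitted shortly after it is read (with buffer_size=3, the first output is always one of the first three inputs), so data stored in label order stays roughly sorted. A buffer at least as large as the dataset produces a full random permutation.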

# Inspect the first 3 batches of the validation set
example_batch = ds_val.take(3)

i = 0
for images, labels in tfds.as_numpy(example_batch):
    i += 1
    print(f'Batch number {i}:\n')
    print(f'Number of images in the batch: {images.shape[0]}')
    print(f'Shape of data in the batch: {images.shape[1:]}')
    print(f'Number of features: {images.shape[1:][0]}')
    print(f'Images min pixel value: {images.min()}')
    print(f'Images max pixel value: {images.max()}')
    print(f'Image values type: {images.dtype}')
    print(f'Labels for images in the batch: {labels}')
    print('#'*30)

Description:

The code takes the first three batches of the validation set (ds_val.take(3)) and, for each batch, prints:

- The batch number.

- The number of images in the batch (images.shape[0]).

- The shape of each flattened image in the batch (images.shape[1:]).

- The number of features (images.shape[1:][0]), which equals the number of pixels in each image (28 × 28 = 784).

- The minimum and maximum pixel values (images.min() and images.max()), which lie between 0 and 1 after normalization.

- The data type of the image values (images.dtype).

- The labels for the images in the batch.

# Define the model. The activation function for each Dense layer is 'relu'
# (except the last one)
model = Sequential()
model.add(Dense(512, input_dim=784, activation='relu'))  # 784 input features (28 x 28 flattened pixels)
model.add(Dense(256, activation='relu'))
model.add(Dense(128, activation='relu'))
model.add(Dense(64, activation='relu'))  # Dense layer with 64 neurons
model.add(Dropout(0.5))  # randomly zero 50% of the activations during training
model.add(Dense(10, activation='softmax'))  # one output per category (10 classes)

Description:

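- The model is a stack of Dense layers with 512, 256, 128, and 64 neurons, each using the ReLU activation function, followed by a Dropout layer that drops 50% of the activations during training. The last Dense layer has 10 neurons, one per category, and uses the softmax activation function to turn the model's outputs into a probability for each class.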
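The softmax output layer turns the raw scores of the last Dense layer into probabilities that sum to 1, and the predicted class is the one with the highest probability. A minimal plain-Python sketch with three made-up scores; softmax here is an illustrative helper, not the Keras function:

```python
import math

def softmax(logits):
    """Numerically stable softmax: exponentiate (after subtracting the max) and normalize."""
    m = max(logits)                            # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
predicted_class = max(range(len(probs)), key=probs.__getitem__)  # index of the largest probability
print([round(p, 3) for p in probs], predicted_class)
```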

# Summary of the model.
model.summary()

Obtained Output:

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 512)               401920
 dense_1 (Dense)             (None, 256)               131328
 dense_2 (Dense)             (None, 128)               32896
 dense_3 (Dense)             (None, 64)                8256
 dropout (Dropout)           (None, 64)                0
 dense_4 (Dense)             (None, 10)                650
=================================================================
Total params: 575,050
Trainable params: 575,050
Non-trainable params: 0
_________________________________________________________________
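The Param # column can be checked by hand: a Dense layer with n inputs and m units has n × m weights plus m biases, and Dropout has no trainable parameters. A short sketch, assuming the layer sizes from the model definition; dense_params is an illustrative helper:

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a Dense layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units

layer_sizes = [784, 512, 256, 128, 64, 10]  # input features, then units per Dense layer
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(per_layer, sum(per_layer))  # → [401920, 131328, 32896, 8256, 650] 575050
```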

# Compile the model.
model.compile(
    loss=tf.keras.losses.SparseCategoricalCrossentropy(),
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
    metrics=['accuracy'],
)

# Train the model for 15 epochs
history = model.fit(
    ds_train,
    epochs=15,
    validation_data=ds_val
)
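The model is compiled with SparseCategoricalCrossentropy, which takes integer labels (as produced by as_supervised=True) and, with its default from_logits=False, expects probabilities such as the softmax outputs. For each example the loss is -log of the probability assigned to the true class, averaged over the batch. A plain-Python sketch; the function is illustrative, and the clipping constant eps is an assumption added to avoid log(0):

```python
import math

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean over the batch of -log(probability assigned to the true class).

    labels: integer class ids; probs: one list of class probabilities per example
    (e.g. softmax outputs), which corresponds to from_logits=False.
    """
    losses = []
    for label, p in zip(labels, probs):
        p_true = min(max(p[label], eps), 1.0 - eps)  # clip so log() never receives 0
        losses.append(-math.log(p_true))
    return sum(losses) / len(losses)

batch_probs = [[0.7, 0.2, 0.1],   # fairly confident in class 0
               [0.1, 0.1, 0.8]]   # fairly confident in class 2
print(sparse_categorical_crossentropy([0, 2], batch_probs))
```

Confident correct predictions give a loss close to 0, while confident wrong predictions are penalized heavily.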

Obtained Output:

Epoch 1/15
469/469 [==============================] - 16s 32ms/step - loss: 0.5261 - accuracy: 0.8462 - val_loss: 0.1847 - val_accuracy: 0.9465
Epoch 2/15
469/469 [==============================] - 15s 32ms/step - loss: 0.2030 - accuracy: 0.9460 - val_loss: 0.1226 - val_accuracy: 0.9634
Epoch 3/15
469/469 [==============================] - 15s 33ms/step - loss: 0.1309 - accuracy: 0.9651 - val_loss: 0.0746 - val_accuracy: 0.9775
Epoch 4/15
469/469 [==============================] - 14s 30ms/step - loss: 0.0990 - accuracy: 0.9737 - val_loss: 0.0577 - val_accuracy: 0.9834
Epoch 5/15
469/469 [==============================] - 14s 30ms/step - loss: 0.0722 - accuracy: 0.9810 - val_loss: 0.0428 - val_accuracy: 0.9872
Epoch 6/15
469/469 [==============================] - 14s 29ms/step - loss: 0.0624 - accuracy: 0.9827 - val_loss: 0.0362 - val_accuracy: 0.9895
Epoch 7/15
469/469 [==============================] - 11s 24ms/step - loss: 0.0500 - accuracy: 0.9865 - val_loss: 0.0243 - val_accuracy: 0.9924
Epoch 8/15
469/469 [==============================] - 14s 30ms/step - loss: 0.0442 - accuracy: 0.9883 - val_loss: 0.0230 - val_accuracy: 0.9931
Epoch 9/15
469/469 [==============================] - 14s 30ms/step - loss: 0.0373 - accuracy: 0.9899 - val_loss: 0.0237 - val_accuracy: 0.9927
Epoch 10/15
469/469 [==============================] - 17s 36ms/step - loss: 0.0347 - accuracy: 0.9906 - val_loss: 0.0215 - val_accuracy: 0.9936
Epoch 11/15
469/469 [==============================] - 11s 24ms/step - loss: 0.0258 - accuracy: 0.9930 - val_loss: 0.0185 - val_accuracy: 0.9946
Epoch 12/15
469/469 [==============================] - 13s 28ms/step - loss: 0.0307 - accuracy: 0.9921 - val_loss: 0.0095 - val_accuracy: 0.9970
Epoch 13/15
469/469 [==============================] - 12s 25ms/step - loss: 0.0183 - accuracy: 0.9948 - val_loss: 0.0167 - val_accuracy: 0.9955
Epoch 14/15
469/469 [==============================] - 14s 29ms/step - loss: 0.0248 - accuracy: 0.9935 - val_loss: 0.0110 - val_accuracy: 0.9966
Epoch 15/15
469/469 [==============================] - 13s 28ms/step - loss: 0.0214 - accuracy: 0.9944 - val_loss: 0.0136 - val_accuracy: 0.9960
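Each epoch in the log runs 469 steps. Assuming a batch size of 128 and that the last, partial batch is kept (the default for Dataset.batch), the number of steps follows directly from the number of images, which makes it easy to check which data a pipeline actually contains. steps_per_epoch is an illustrative helper:

```python
import math

def steps_per_epoch(num_examples, batch_size):
    """Batches per epoch when the final, smaller batch is kept (drop_remainder=False)."""
    return math.ceil(num_examples / batch_size)

for name, n in [("full training split", 60_000),
                ("training split minus 10,000 validation images", 50_000),
                ("test split", 10_000)]:
    print(f"{name}: {n} images -> {steps_per_epoch(n, 128)} steps")
```

469 steps correspond to the full 60,000-image training split, not to the 50,000 images left after setting aside the validation set, which would take 391 steps.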

[loss, acc] = model.evaluate(ds_test)
print("Model accuracy is equal to {0:.2f}%".format(acc*100))  # print the accuracy of the model

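Obtained Output:

469/469 [==============================] - 6s 13ms/step - loss: 0.0136 - accuracy: 0.9960
Model accuracy is equal to 99.60%

Note that this evaluation ran for 469 steps of 128 images, which corresponds to the 60,000-image training split rather than the 10,000-image test split (which would take 79 steps), and that its loss and accuracy are identical to the final epoch's val_loss and val_accuracy. In this run, ds_val and ds_test were therefore built from the training data, so 99.60% measures accuracy on images the model was trained on, not on held-out test images.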

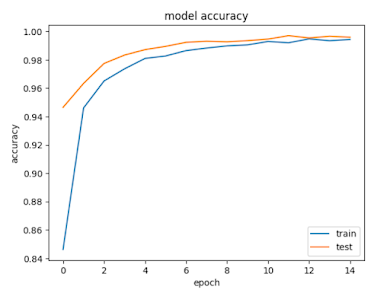

# History for accuracy
plt.plot(history.history['accuracy'])
plt.plot(history.history['val_accuracy'])
plt.title('model accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='lower right')
plt.show()

# History for loss
plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('model loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train', 'validation'], loc='upper right')
plt.show()

Obtained Output:

Key Points to Remember

Keep the following points in mind at each stage of the deep learning pipeline:

- Gather or build a dataset suited to your deep learning task.

- Clean, normalize, and convert the data into the format the model expects.

- Choose a deep learning architecture suited to the problem. Convolutional neural networks (CNNs) are a common choice for image tasks, while recurrent neural networks (RNNs) and transformer models are common for sequential data such as natural language.

- Consider the dataset's size, the problem's complexity, and the computational resources that are available.

- The dataset should be divided into training, validation, and test sets.

- Initialize the model's parameters and choose a loss function that measures the difference between the predicted and actual outputs.

- Update the model's parameters iteratively with stochastic gradient descent (SGD) or an adaptive optimizer such as Adam.

- In accordance with the model's performance on the validation set, tweak hyperparameters (such as learning rate and batch size).

- Regularize the model to avoid overfitting by utilizing methods such as dropout, L1/L2 regularization, or data augmentation.

- Evaluate the trained model on the test set. The test set gives a fair estimate of performance only if it was not used for training or hyperparameter tuning.

- Use evaluation metrics suited to the task, such as accuracy, precision, recall, or F1 score for classification, or mean squared error for regression.

- To understand the model's strengths and weaknesses, analyze and interpret the model's performance.

- Adjust the model's hyperparameters or, if necessary, change the architecture based on the evaluation's findings.

- Improve the performance of the model by iterating through the training, evaluating, and fine-tuning phases until satisfactory results are obtained.

- Once the model has been trained and tested, integrate it into your application or deploy it to a production environment.

- Consider model size, available computing power, and latency requirements when optimizing the model for efficient inference.

- Monitor the model's performance in the deployed system, analyze it, and update the model as necessary.

- Keep in mind that the deep learning workflow is not linear: to get the desired results, you may need to return to earlier phases and iterate. The workflow should also take into account ethical considerations in data collection and model use, as well as understanding and interpreting the model's output.
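For classification, precision, recall, and F1 score are computed per class from true positives, false positives, and false negatives, and can be averaged across classes (macro averaging). A plain-Python sketch on made-up labels; the helper names are illustrative, and in practice a library function such as scikit-learn's sklearn.metrics.f1_score(y_true, y_pred, average='macro') does the same job:

```python
def per_class_metrics(y_true, y_pred, cls):
    """Precision, recall, and F1 for one class, treating `cls` as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of the per-class F1 scores."""
    return sum(per_class_metrics(y_true, y_pred, c)[2] for c in range(num_classes)) / num_classes

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
print(accuracy(y_true, y_pred), macro_f1(y_true, y_pred, num_classes=3))
```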

Conclusion

The model reached 99.60% accuracy in the final evaluation. However, in this run the evaluation used images from the training split: 469 batches of 128 correspond to the 60,000 training images, while the 10,000-image test split would take 79 batches. The figure therefore measures how well the model fits its training data, not how it performs on unseen images. A true test accuracy on KMNIST, which contains ten classes of cursive Japanese (Kuzushiji) characters, requires evaluating on a ds_test pipeline built from the test split.

The output also reports a loss of 0.0136, the value of the objective function (sparse categorical cross-entropy) that training tries to minimize. A low loss on training data shows that the model fits that data well; only a low loss on held-out data indicates that it generalizes well to new data.

Overall, this implementation shows how to design, train, and evaluate a deep learning model on a real-world dataset using TensorFlow.
